Google Trax vs Apache Spark MLlib: Notes on machine learning

Chris Thomas 15 Jan, 2021 | 12-15 min read

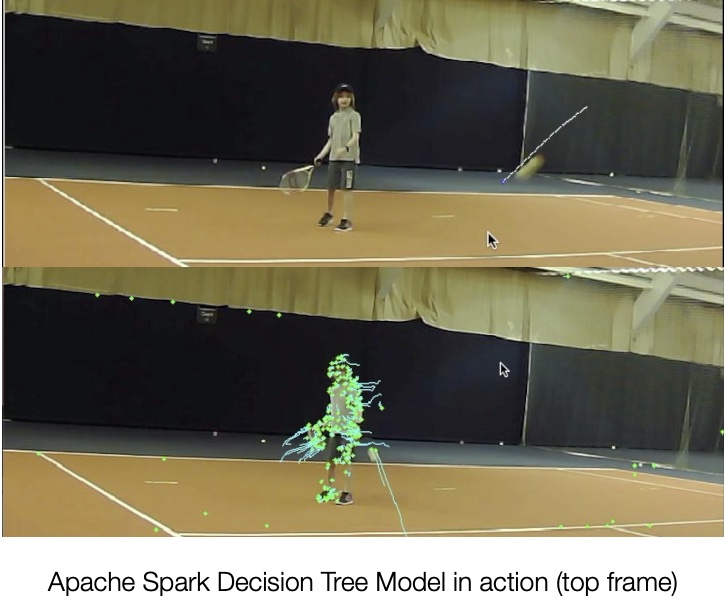

In the past, I'd built a decision tree model out of a smallish labelled dataset and Apache Spark MLlib. Recently, I was introduced to neural networks and the new Google Trax library. It got me thinking ‘How would the two, very different, approaches compare?’ To explore this question, I set about building an equivalent deep learning model to my existing Apache Spark decision tree model, this time with Google Trax.

A little background

Early last year, I started experimenting with Apache Spark MLlib. As a data engineer, building pipelines with Apache Spark day-in-day-out, picking Spark for machine learning was the natural choice for me. Then, at the end of the year, I was introduced to neural networks and the Google Trax library after taking some courses from the excellent Natural Language Processing Specialization by DeepLearning.AI.

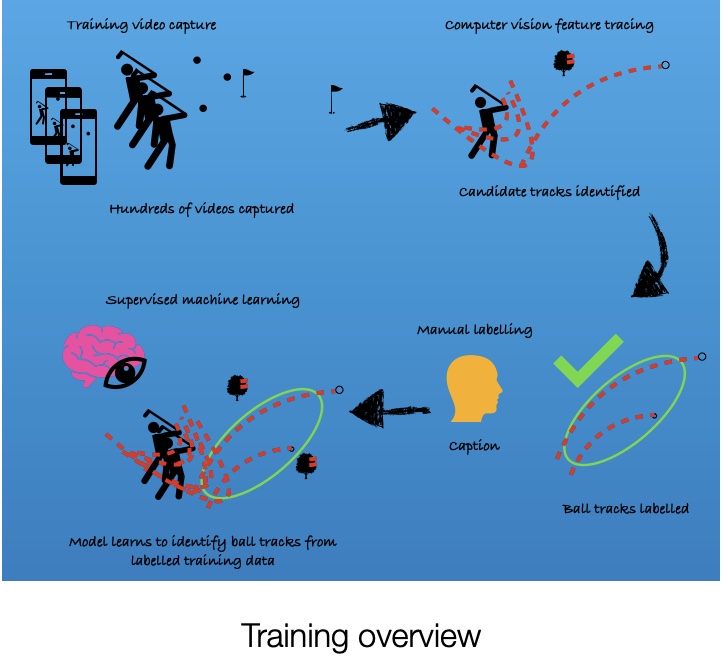

The problem I chose to tackle with machine learning was tracking ball flight in sports videos. Think Hawk-Eye in tennis and cricket, or TopTracer in golf.

Naturally, the data pipeline required to tackle such a problem must start with some computer vision techniques to track moving objects in a video. I’ll leave the details for another post, perhaps, but suffice it to say that once the computer vision layer has identified all candidate tracks in a video, the machine learning problem becomes a case of accurately picking out the ball tracks.

With the computer vision tackled, it left me with a supervised binary classification problem:

- Supervised - Because the model needed to be trained with data that had the ball tracks labelled as such.

- Classification - As the model’s job was to correctly classify unlabelled tracks from videos that it had never seen before as either ball tracks, or something else.

- Binary - Because there were only two possible outcomes that it needed to predict, either a track belonged to a ball, or it didn’t. Simple as that.

I built a visual annotation tool to help label the ball tracks and set about labelling a small dataset of around ten 30-second video clips of my nephews and niece playing tennis, holding back one of the videos for visual testing after I had done the model training and evaluation. Even this small dataset produced over 300K tracks. That is not as daunting a labelling task as it might initially seem, as the number of ball tracks within the dataset was naturally very small, perhaps only 100 or so.
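The class imbalance in those numbers is worth a quick calculation. The sketch below uses the approximate counts quoted above (round figures, not exact dataset counts) to show why plain accuracy would say very little about a ball-track classifier:

```python
# Approximate counts taken from the text above; round figures only.
total_tracks = 300_000
ball_tracks = 100

# Fraction of all candidate tracks that are actually ball tracks.
positive_rate = ball_tracks / total_tracks

# A trivial model that labels every track "not a ball" is right on every negative.
always_negative_accuracy = (total_tracks - ball_tracks) / total_tracks

print(f"ball tracks: {positive_rate:.4%} of all tracks")
print(f"accuracy of always predicting 'not a ball': {always_negative_accuracy:.4%}")
```

With so few positives, a model can score very high accuracy while finding no balls at all, which is why recall and precision matter more for this problem.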

What follows are some notes on the differences that stood out for me between building a neural network model with Google Trax, having previously built a decision tree model with Apache Spark to tackle the same problem. The notes may be of interest to data engineers, analysts, or data scientists familiar with machine learning concepts and interested in one, or both, of the above libraries.

Training platform

Both Trax and Spark allow you to start off as a laptop warrior and both have no problem running locally, but when training times start to run into hours, you start looking around for something faster.

Google’s Colab is a community cloud GPU/TPU resource, where you can potentially get access to some powerful compute resource for free. Fantastic. I experienced around a 1.5x speed up compared to my 2 core, 8 GB MacBook. Not blinding, but worth making the switch for. As you’d expect, there are no availability guarantees and no guarantee for how long Google will make this free service available. When you need guaranteed availability, there is of course a pro version, but for now this is only available in the US or Canada 😞

There are also those offering limited free compute resource for Apache Spark, again with the expectation that some will graduate onto paid plans. Databricks has a community service where you can spin up a single mid-tier VM running Spark MLlib for free. However, at 15 GB memory and 2 cores, it is probably only equivalent to your laptop, or less. So it didn’t pull me into the Databricks service, beyond my curiosity just to try out the environment.

Having said that, I have found that, so far, manually annotating (labelling) my dataset has been the bottleneck in supervised learning, not the compute resource. That is, it has taken longer to manually label a video from my dataset than it has to incrementally train the model on the most recently labelled data. So, if I kick off incremental training alongside a labelling session, it will typically be completed before the labelling task is done. As such, I’ve not yet needed to move off my laptop onto more powerful GPU/TPU resources.

Feature engineering

For my decision tree model, I spent a lot of time, in fact the majority of my time, engineering features that I thought would be relevant for the problem domain e.g. track speed, track length etc. Now, while it’s entirely possible to plug those features into a neural network, it is also possible to get the network to generate the features on your behalf. Really?! Yes, this seemed like a pretty wild concept to me the first time I heard about it. How can the model possibly know better than me which features make sense for the problem at hand? And if it can, then what am I needed for? 🙂

Of course, it turns out that the model doesn’t know anything about the domain. But by creating enough features, or dimensions (100s as opposed to the handful I came up with), and training the parameters associated with them, the combination of features becomes imbued with meaning as the model is trained. As a result, a combination of nodes in the network is activated, or lights up, when it recognises a target. In this case, a ball track.

This option with neural nets, to use an embedding to generate hundreds of dimensions, can reduce the significant time spent manually engineering features. It can also potentially build features into the model that are hard, if not impossible, to come up with from intuition alone.
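To make the idea of an embedding a little more concrete, here is a minimal pure-Python sketch. It is not the Trax API, and it assumes each track has already been encoded as a sequence of integer token IDs (the encoding here is hypothetical). Each ID looks up a row of numbers in a table, and the rows are averaged into one feature vector per track. In a real network the table values would be learned during training rather than left at their random starting values.

```python
import random

def make_embedding_table(vocab_size, d_feature, seed=0):
    """Create a vocab_size x d_feature table of small random starting values."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.1) for _ in range(d_feature)]
            for _ in range(vocab_size)]

def embed_and_pool(token_ids, table):
    """Look up one vector per token ID, then average them into a single vector."""
    vectors = [table[t] for t in token_ids]
    d_feature = len(table[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(d_feature)]

# Hypothetical sizes and a hypothetical token encoding of one track.
table = make_embedding_table(vocab_size=50, d_feature=128)
track_tokens = [3, 17, 17, 42]
features = embed_and_pool(track_tokens, table)

print(len(features))  # one value per embedding dimension
```

None of the 128 dimensions has a human-chosen meaning such as "track speed"; any meaning would only emerge as training adjusts the table.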

Cluster configuration

Apart from swapping the Python array handling library, NumPy, for a faster, GPU/TPU-targeted version, the same Trax code deployed on your laptop can be moved to a massively powerful TPU pod in the cloud without code changes. There is also no need to configure the underlying cluster of GPUs/TPUs in any way. The same cannot be said of Spark, where significant cluster configuration can be required (number of nodes, number of workers per node, worker memory, driver memory, and the list goes on). That said, the configuration effort can be reduced by adopting a managed cloud service such as AWS EMR Managed Auto Scaling.
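To give a flavour of the Spark settings involved, the sketch below assembles a `spark-submit` command line from a handful of standard Spark configuration properties. The values and the script name `train_tracks.py` are illustrative assumptions, not recommendations or my actual setup.

```python
import shlex

# Standard Spark property names; the values are illustrative only.
spark_conf = {
    "spark.executor.instances": "4",  # how many executors to request
    "spark.executor.cores": "2",      # cores per executor
    "spark.executor.memory": "4g",    # memory per executor
    "spark.driver.memory": "2g",      # memory for the driver process
}

def spark_submit_command(app_path, conf):
    """Build a spark-submit argument list with one --conf flag per setting."""
    cmd = ["spark-submit"]
    for key, value in conf.items():
        cmd += ["--conf", f"{key}={value}"]
    cmd.append(app_path)
    return cmd

# "train_tracks.py" is a hypothetical training script name.
command = spark_submit_command("train_tracks.py", spark_conf)
print(shlex.join(command))
```

Each of these values typically has to be tuned to the cluster and the dataset, which is the effort that a managed service aims to reduce.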

Language support

If you are a Python programmer you are fully supported in both libraries. However, Trax only supports Python, whereas Spark has APIs for Python, Java, Scala, and R, with Python and Scala being the first-class citizens as the most popular variant and the native language respectively.

Code simplicity

Trax is designed to help you write clean, readable code. Both models and data pipelines can be written in a few lines of clear code. Spark MLlib is also strong here, helping you write readable code through SQL-like, declarative syntax. However, with Spark, the programmer has to be cognisant of the underlying machine cluster that the code is running on to avoid long-running jobs or blow-ups, e.g. by minimising data shuffles between nodes in the cluster, or by avoiding aggregating large datasets and overflowing the driver node. In contrast, with Trax, the code can be deployed on a laptop, or a 100+ petaflop, 32 TB HBM machine without any changes (configuration, design, or otherwise).

Model evaluation

I found the built-in evaluation metrics seemingly limited in Trax. I couldn’t find functions for computing recall, precision, or F-score that I had been used to in Spark. Only accuracy and entropy were available. Writing your own evaluation functions is, of course, an option.
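As a rough idea of what writing your own might look like, here is a minimal sketch in plain Python. It assumes the labels and the model's predictions have already been converted to lists of 0s and 1s (1 meaning "ball track"); the function name and the sample data are made up for illustration.

```python
def binary_metrics(labels, predictions, beta=1.0):
    """Return (precision, recall, f_score) for 0/1 labels and predictions."""
    pairs = list(zip(labels, predictions))
    tp = sum(1 for y, p in pairs if y == 1 and p == 1)  # balls found
    fp = sum(1 for y, p in pairs if y == 0 and p == 1)  # false alarms
    fn = sum(1 for y, p in pairs if y == 1 and p == 0)  # balls missed

    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0

    beta_sq = beta * beta
    denom = beta_sq * precision + recall
    f_score = (1 + beta_sq) * precision * recall / denom if denom else 0.0
    return precision, recall, f_score

# Made-up example: 3 real ball tracks, 4 tracks predicted as balls.
labels      = [1, 1, 1, 0, 0, 0, 0, 0]
predictions = [1, 1, 0, 1, 1, 0, 0, 0]
precision, recall, f_score = binary_metrics(labels, predictions)
print(precision, recall, f_score)
```

With `beta=1.0` this is the usual F1 score; a larger `beta` weights recall more heavily, which may suit a problem where missing a ball track is worse than a false positive.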

Documentation

Trax is new. First released in Nov 2019, it has just 3 main contributors, plus a handful of co-contributors. It is still being actively developed. The walkthrough, taking you through the definition and training of a sentiment model, is great. However, I have been spoilt with Spark, where the documentation is fantastic. As an engineer, trying to grasp a new API and concepts, I crave examples. The Spark docs have loads of examples for creating, training and evaluating all kinds of ML models. That level of documentation isn’t there yet with Trax. The Trax walkthrough would need to be expanded to cover all the supported models, and a good selection of data pipeline, training, evaluation and prediction examples to compete with the Spark documentation set.

APIs

Both are ok. Both would benefit from making use of Python's relatively new typing module, particularly the Spark API. In my experience, the type of an expected input to some of the API functions is not always clear from the signature, e.g. DataFrame ‘select’ accepts a column name as a string, but ‘filter’ expects a Column expression (or a SQL expression string) rather than a bare column name.
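To illustrate the kind of signature I have in mind, here is a small sketch using the typing module. The `Column` class and both functions are hypothetical stand-ins written for this example; they are not the Spark API.

```python
from typing import List, Union

class Column:
    """Hypothetical stand-in for a column expression type."""
    def __init__(self, name: str) -> None:
        self.name = name

# A type alias makes "a Column or a column name" explicit in signatures.
ColumnOrName = Union[Column, str]

def select(*cols: ColumnOrName) -> List[str]:
    """Accept columns or plain names and return the selected column names."""
    return [c.name if isinstance(c, Column) else c for c in cols]

def filter_rows(condition: Column) -> str:
    """Accept only a Column; the annotation says so without reading the docs."""
    return f"filter on {condition.name}"

print(select("speed", Column("length")))
print(filter_rows(Column("speed")))
```

With annotations like these, an IDE or type checker can flag a wrong argument type before the code is ever run.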

Both API docs are ok. However, the Trax docstrings were hidden from my IDE behind some configuration indirection, which was pretty frustrating. Spark also used to suffer from this, as the Python API is just a wrapper around the native Scala implementation. However, in recent releases, docstrings have been promoted into the Python wrapper, making them accessible from the IDE.

Hardware

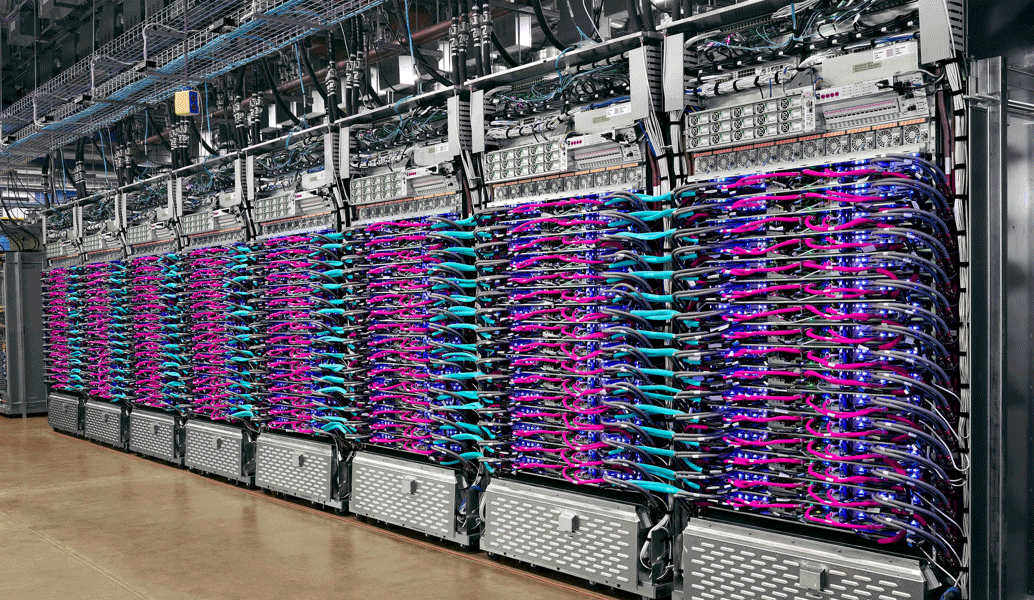

Trax is designed to run on vertically scaled, highly specialised, GPU/TPU pods. Apache Spark, on the other hand, is designed to run on a cluster of commodity machines/VMs. This is a striking architectural design difference. Sure, you can start off with either library running on your laptop, but Trax is designed to scale on highly specialised hardware that must be scaled vertically by adding more GPUs/TPUs, whereas Spark is designed to run on commodity hardware that can be scaled horizontally by adding more machines.

Practically speaking, if you are running on a cloud service the difference may not matter that much. However, if you are running on your own hardware, the difference is significant. With Spark you can make use of as much spare computing power as you might own, adding each computer into your own Spark cluster. With Trax, if you want to scale on your own hardware, you're going to have to join the Bitcoin miners and buy a multi-GPU rig, at considerable cost.

Community support

There is an active, if small, Trax community where I was able to get help with my newbie questions. The community feels small enough that you might get a response from one of the main contributors and active enough that you should get a response to your questions. Spark also has an active community mailing list, where I’ve been lucky enough to get a response from one of the original Spark authors and main contributors.

Performance

So how did the two approaches actually perform? Well, given the small dataset, it was too much to expect great results from either approach and that was pretty much borne out. Comparing a sentiment classifier neural network model built on Trax with the Spark decision tree classifier, visual results with the test video were pretty comparable. Both models gave acceptable recall, but only moderate precision, throwing out a smattering of false positives.

Here is a short demo of the deep learning approach in action.

Some useful sites I found along the way

1. A great explanation of the maths by Rafay Khan.

2. Not Trax, but a useful guide to implementing binary classification with a library Trax is intended to supersede.

About me

I am predominately a data engineer, but have experience across the majority of the stack (no CSS though 😀)